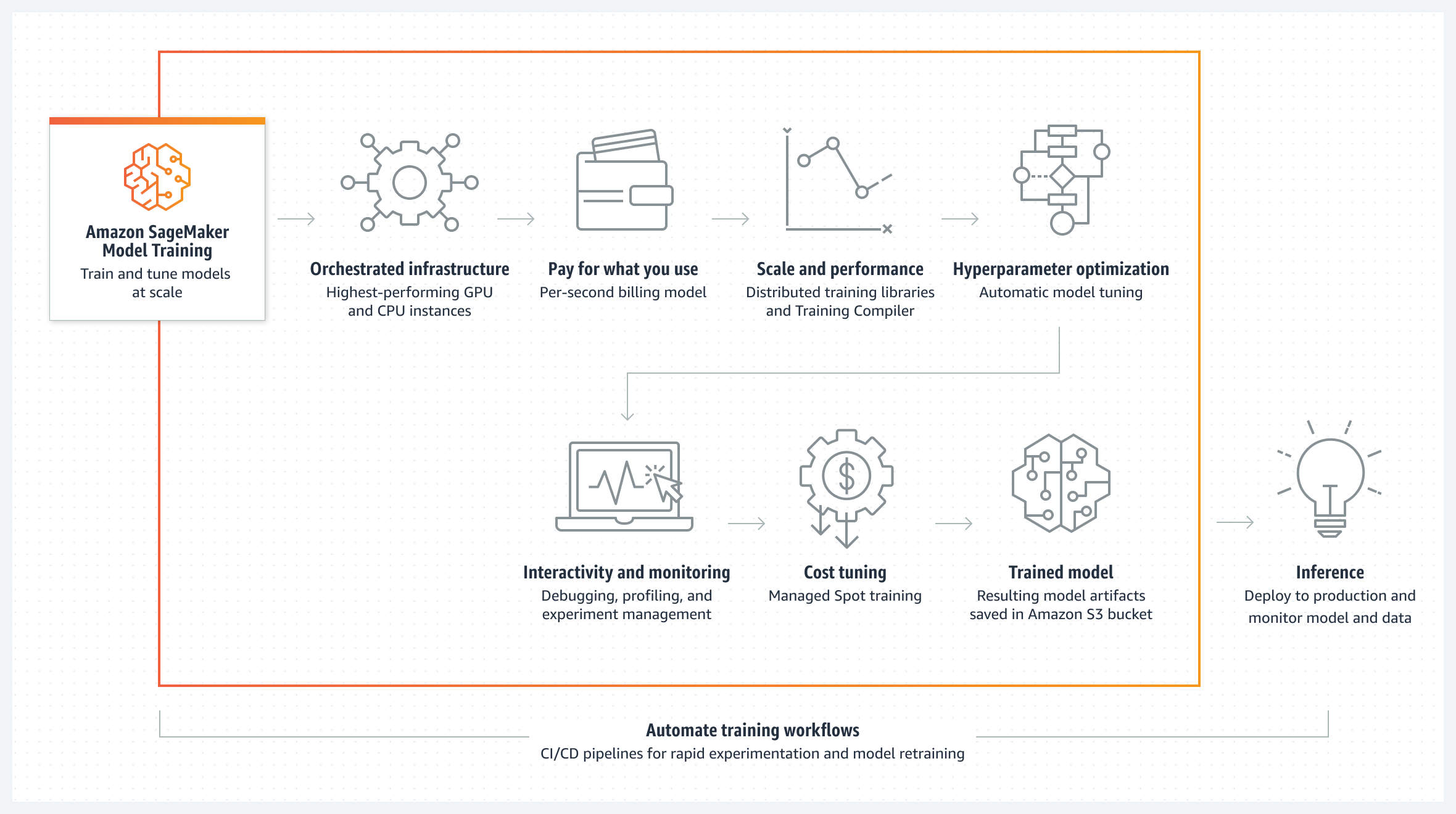

Amazon SageMaker Model Training

Train ML models quickly and cost effectively with Amazon SageMaker

Managed infrastructure for large-scale and cost-effective training

High-performance distributed training at scale

Built-in tools for the highest accuracy and monitoring

Amazon SageMaker Model Training reduces the time and cost to train and tune machine learning (ML) models at scale without the need to manage infrastructure. You can take advantage of the highest-performing ML compute infrastructure currently available, and SageMaker can automatically scale infrastructure up or down, from one to thousands of GPUs. Since you pay only for what you use, you can manage your training costs more effectively. To train deep learning models faster, SageMaker distributed training libraries can automatically split large models and training datasets across AWS GPU instances, or you can use third-party libraries, such as DeepSpeed, Horovod, or Megatron.

Fully managed infrastructure at scale

Broad choice of hardware

Manage system resources efficiently with a wide choice of GPU and CPU instances, including P4d.24xlarge instances, which are the fastest training instances currently available in the cloud.

Easy setup and scale

Specify the location of data, indicate the type of SageMaker instances, and get started with a single click. SageMaker can automatically scale infrastructure up or down, from one to thousands of GPUs.
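The setup step above comes down to a small amount of configuration. Here is a minimal sketch, assuming you call the low-level CreateTrainingJob API directly rather than going through the higher-level SageMaker Python SDK. The request names the data location, the instance type, and the instance count, and you scale out by raising `InstanceCount`. The job name, image URI, role ARN, and bucket paths are hypothetical placeholders. `build_training_job_request` is an illustrative helper, not part of any AWS library.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, instance_type, instance_count=1):
    """Return a dict shaped like a CreateTrainingJob request (hypothetical helper)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,    # container image that holds the training code
            "TrainingInputMode": "File",   # download data to the instance before training
        },
        "RoleArn": role_arn,               # IAM role that SageMaker assumes for the job
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,     # where the training data lives
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,    # which hardware to train on
            "InstanceCount": instance_count,  # raise this to scale out
            "VolumeSizeInGB": 50,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


# All identifiers below are hypothetical placeholders.
request = build_training_job_request(
    job_name="example-training-job",
    image_uri="111122223333.dkr.ecr.us-east-1.amazonaws.com/example-image:latest",
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    train_s3_uri="s3://example-bucket/train/",
    output_s3_uri="s3://example-bucket/output/",
    instance_type="ml.p4d.24xlarge",
    instance_count=2,
)
# With boto3 installed and AWS credentials configured, the request could be sent with:
#   boto3.client("sagemaker").create_training_job(**request)
```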

High-performance distributed training

Distributed training libraries

With only a few lines of code, you can add either data parallelism or model parallelism to your training scripts. SageMaker makes it faster to perform distributed training by automatically splitting your models and training datasets across AWS GPU instances.
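You can illustrate data parallelism without any GPUs. The following conceptual sketch is plain Python, not the API of the SageMaker distributed training libraries. It splits a toy dataset across four simulated workers, and each worker computes a gradient on its own shard. The gradients are then averaged before one shared update is applied; across GPUs, an all-reduce performs this averaging. The one-weight linear model, learning rate, and worker count are illustrative assumptions.

```python
def shard(data, num_workers, rank):
    """Give worker `rank` every num_workers-th example (round-robin split)."""
    return data[rank::num_workers]


def grad_mse(w, batch):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the average."""
    return sum(values) / len(values)


def data_parallel_step(w, data, num_workers, lr):
    # Each simulated worker sees only its own shard of the data.
    local_grads = [grad_mse(w, shard(data, num_workers, r)) for r in range(num_workers)]
    # All workers apply the same averaged gradient, so their weights stay in sync.
    return w - lr * all_reduce_mean(local_grads)


data = [(x, 3.0 * x) for x in range(1, 9)]  # 8 examples; the true weight is 3
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, data, num_workers=4, lr=0.01)
print(round(w, 4))  # 3.0
```

The shards are equal in size, so the averaged shard gradients equal the full-batch gradient. Each worker processes only a quarter of the data per step, and the update itself does not change.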

Training Compiler

Amazon SageMaker Training Compiler can accelerate training by up to 50 percent through graph- and kernel-level optimizations that use GPUs more efficiently.

Built-in tools for the highest accuracy and lowest cost

Automatic model tuning

SageMaker can automatically tune your model by trying thousands of hyperparameter combinations to arrive at the most accurate predictions, saving weeks of effort.
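Automatic model tuning trains the model repeatedly with different hyperparameter values and keeps the best result according to an objective metric. SageMaker supports several search strategies, including Bayesian optimization. The sketch below swaps in plain random search because it is the simplest to show. The search space is hypothetical. The `validation_loss` function is also hypothetical: it stands in for launching a training job and reading back its metric.

```python
import math
import random

# Hypothetical search space: a log-scale learning rate and an integer tree depth.
SEARCH_SPACE = {
    "learning_rate": (1e-4, 1e-1),  # sampled uniformly on a log10 scale
    "max_depth": (2, 10),           # inclusive integer range
}


def sample_params(rng):
    lo, hi = SEARCH_SPACE["learning_rate"]
    lr = 10 ** rng.uniform(math.log10(lo), math.log10(hi))
    depth = rng.randint(*SEARCH_SPACE["max_depth"])
    return {"learning_rate": lr, "max_depth": depth}


def validation_loss(params):
    """Hypothetical stand-in for running a training job and reading its metric."""
    return (math.log10(params["learning_rate"]) + 2) ** 2 + 0.1 * abs(params["max_depth"] - 6)


def random_search(num_trials, seed=0):
    """Evaluate `num_trials` random configurations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(num_trials):
        params = sample_params(rng)
        loss = validation_loss(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best, loss = random_search(num_trials=200)
print(best, round(loss, 3))
```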

Managed Spot training

SageMaker helps reduce training costs by up to 90 percent by running training jobs on Amazon EC2 Spot Instances, which use spare compute capacity, and by automatically starting those jobs when capacity becomes available. These training jobs are also resilient to interruptions caused by changes in capacity.
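Resilience to Spot interruptions typically relies on checkpointing. The training script saves its state periodically, and a restarted job resumes from the latest checkpoint instead of starting over. SageMaker can sync a local checkpoint directory to Amazon S3 for this purpose. The sketch below models only the script side: it uses a JSON file in a temporary directory and simulates an interruption. The state fields and the `interrupt_at` parameter are illustrative assumptions.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return saved state if a checkpoint exists, else a fresh starting state."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"epoch": 0, "weight": 0.0}


def save_checkpoint(path, state):
    # Write to a temporary file, then rename it, so that an interruption
    # mid-write cannot leave a corrupt checkpoint behind.
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def train(checkpoint_path, total_epochs, interrupt_at=None):
    state = load_checkpoint(checkpoint_path)
    for epoch in range(state["epoch"], total_epochs):
        if epoch == interrupt_at:
            raise RuntimeError("simulated Spot interruption")
        state["weight"] += 1.0  # stand-in for one epoch of real training
        state["epoch"] = epoch + 1
        save_checkpoint(checkpoint_path, state)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    train(ckpt, total_epochs=10, interrupt_at=6)  # capacity is reclaimed at epoch 6
except RuntimeError:
    pass  # the job stops; it is restarted later when capacity returns
final = train(ckpt, total_epochs=10)  # resumes at epoch 6 instead of epoch 0
print(final)  # {'epoch': 10, 'weight': 10.0}
```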

Built-in tools for interactivity and monitoring

Debugger and profiler

Amazon SageMaker Debugger captures metrics and profiles training jobs in real time, so you can quickly correct performance issues before deploying the model to production.
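Debugger evaluates rules against the tensors and metrics that a job emits while it runs. Its built-in rules include checks such as loss not decreasing. The sketch below is a hypothetical, simplified rule in plain Python, not the Debugger API. It reports the step at which the loss has failed to improve by at least 1 percent over a sliding window. The window size and threshold are illustrative assumptions.

```python
def loss_not_decreasing(losses, window=5, min_rel_improvement=0.01):
    """True if the last `window` losses never beat the value just before the window
    by at least `min_rel_improvement` (relative)."""
    if len(losses) <= window:
        return False  # not enough history yet
    before = losses[-window - 1]
    best_recent = min(losses[-window:])
    return best_recent > before * (1 - min_rel_improvement)


def monitor(loss_stream, window=5):
    """Check the rule after every step; return the step where it fires, or None."""
    history = []
    for step, loss in enumerate(loss_stream):
        history.append(loss)
        if loss_not_decreasing(history, window):
            return step
    return None


plateau = [1.0, 0.8, 0.6, 0.5, 0.45, 0.44, 0.44, 0.44, 0.44, 0.44, 0.44]
print(monitor(plateau))  # 10
```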

Experiment management

Amazon SageMaker Experiments captures input parameters, configurations, and results, and it stores them as experiments to help you track ML model iterations.
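At its core, experiment tracking means attaching each run's parameters, configuration, and resulting metrics to that run, so that runs can be compared later. The following sketch models that record-keeping with plain dataclasses. `RunRecord` and `ExperimentLog` are hypothetical names, not classes from the SageMaker Experiments SDK, and the values are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class RunRecord:
    """One training iteration: its inputs, setup, and results (hypothetical)."""
    name: str
    parameters: dict
    config: dict
    metrics: dict = field(default_factory=dict)


@dataclass
class ExperimentLog:
    name: str
    runs: list = field(default_factory=list)

    def log_run(self, run):
        self.runs.append(run)

    def best_run(self, metric, minimize=True):
        """Return the run with the lowest (or highest) value of `metric`."""
        candidates = [r for r in self.runs if metric in r.metrics]
        choose = min if minimize else max
        return choose(candidates, key=lambda r: r.metrics[metric])


experiment = ExperimentLog("example-experiment")
experiment.log_run(RunRecord("run-1", {"learning_rate": 0.1},
                             {"instance_type": "ml.m5.xlarge"}, {"val_loss": 0.42}))
experiment.log_run(RunRecord("run-2", {"learning_rate": 0.01},
                             {"instance_type": "ml.m5.xlarge"}, {"val_loss": 0.31}))
print(experiment.best_run("val_loss").name)  # run-2
```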

Amazon SageMaker with TensorBoard

Amazon SageMaker with TensorBoard helps you to save development time by visualizing the model architecture to identify and remediate convergence issues, such as validation loss not converging or vanishing gradients.
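Visualizing gradients helps because vanishing gradients follow a recognizable pattern, which you can see by computing the gradients of a deliberately deep stack of sigmoid units. The toy network here is hypothetical: ten scalar layers, with every weight set to 1. Each backward step multiplies the gradient by the sigmoid's derivative, which is at most 0.25, so the gradient that reaches the first layers shrinks geometrically. A per-layer gradient plot would show this pattern. The network and the 1e-4 threshold are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_gradients(x, num_layers, weight=1.0):
    """Return |d output / d z_k| for each layer k of a chain of scalar sigmoid layers."""
    pre_activations = []
    a = x
    for _ in range(num_layers):            # forward pass
        z = weight * a
        pre_activations.append(z)
        a = sigmoid(z)
    grads = [0.0] * num_layers
    g = 1.0                                # d output / d output
    for k in reversed(range(num_layers)):  # backward pass
        s = sigmoid(pre_activations[k])
        g *= s * (1.0 - s)                 # through layer k's sigmoid (at most 0.25)
        grads[k] = abs(g)
        g *= weight                        # through the weight feeding layer k
    return grads


grads = layer_gradients(x=0.5, num_layers=10)
vanishing = [k for k, g in enumerate(grads) if g < 1e-4]  # layers that barely learn
print(vanishing)
```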

Full customization

SageMaker comes with built-in libraries and tools that make model training easier and faster. SageMaker works with popular open-source ML models, such as GPT, BERT, and DALL·E; ML frameworks, such as PyTorch and TensorFlow; and model libraries, such as Hugging Face Transformers. With SageMaker, you can use popular open-source libraries and tools, such as DeepSpeed, Megatron, Horovod, Ray Tune, and TensorBoard, based on your needs.

Accelerate local ML code conversion to training jobs

The Amazon SageMaker Python SDK lets you run ML code that you write in your preferred IDE or in local notebooks, together with its runtime dependencies, as large-scale ML model training jobs with minimal code changes. You only need to add a single line of code (a Python decorator) to your local ML code. The SageMaker Python SDK then packages the code with the datasets and workspace environment setup and runs it as a SageMaker training job.
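In the SageMaker Python SDK, this decorator is `@remote` from the `sagemaker.remote_function` module. The sketch below does not call it. Instead, it uses a hypothetical local stand-in, `remote_job`, to illustrate the pattern. The decorator captures the function, its arguments, and the job settings (an instance type and a dependency file) into a job description that a launcher could submit. In this sketch, it then simply runs the function locally. The `dependencies` parameter and the `last_job_spec` attribute are invented for illustration.

```python
import functools
import sys


def remote_job(instance_type, dependencies=None):
    """Hypothetical stand-in for a 'run this function as a training job' decorator.

    It records what would be shipped to the job (function, arguments, settings,
    interpreter version) and then runs the function locally instead.
    """
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            job_spec = {
                "function": func.__qualname__,
                "args": args,
                "kwargs": kwargs,
                "instance_type": instance_type,
                "dependencies": dependencies or [],
                "python_version": "%d.%d" % sys.version_info[:2],
            }
            wrapper.last_job_spec = job_spec  # what a real launcher would submit
            return func(*args, **kwargs)
        wrapper.last_job_spec = None
        return wrapper
    return decorator


# The only change to the local training code is the decorator line.
@remote_job(instance_type="ml.m5.xlarge", dependencies=["requirements.txt"])
def train(epochs, learning_rate=0.1):
    return {"epochs_run": epochs, "learning_rate": learning_rate}


result = train(3, learning_rate=0.05)
print(result)                           # {'epochs_run': 3, 'learning_rate': 0.05}
print(train.last_job_spec["function"])  # train
```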

Automated ML training workflows

Automating training workflows helps you create a repeatable process to orchestrate model development steps for rapid experimentation and model retraining. You can automate the entire model build workflow, including data preparation, feature engineering, model training, model tuning, and model validation, using Amazon SageMaker Pipelines. You can configure SageMaker Pipelines to run automatically at regular intervals or in response to specific events, or you can run them manually as needed.
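A pipeline like this is essentially a directed acyclic graph of steps, where each step runs only after its inputs are ready. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) to order the five steps named above. It is not the SageMaker Pipelines SDK, and the dependency edges and the `run_step` stand-in are illustrative assumptions. The sketch covers dependency ordering only, not scheduling or event triggers.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps whose outputs it needs (hypothetical edges).
PIPELINE = {
    "data_preparation": set(),
    "feature_engineering": {"data_preparation"},
    "model_training": {"feature_engineering"},
    "model_tuning": {"model_training"},
    "model_validation": {"model_tuning", "feature_engineering"},
}


def run_step(step, inputs):
    """Stand-in for launching a processing, training, or tuning job."""
    return f"{step} output (used {sorted(inputs)})"


def run_pipeline(dag, step_runner):
    """Run each step exactly once, after every step it depends on has finished."""
    outputs = {}
    for step in TopologicalSorter(dag).static_order():
        inputs = {dep: outputs[dep] for dep in dag[step]}
        outputs[step] = step_runner(step, inputs)
    return outputs


results = run_pipeline(PIPELINE, run_step)
print(list(results))
# ['data_preparation', 'feature_engineering', 'model_training', 'model_tuning', 'model_validation']
```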

Customer success

LG AI Research aims to lead the next era of AI by using Amazon SageMaker to train and deploy ML models faster.

“We recently debuted Tilda, the AI artist powered by EXAONE, a super giant AI system that can process 250 million high-definition image-text pair datasets. The multi-modality AI allows Tilda to create a new image by itself, with its ability to explore beyond the language it perceives. Amazon SageMaker was essential in developing EXAONE, because of its scaling and distributed training capabilities. Specifically, due to the massive computation required to train this super giant AI, efficient parallel processing is very important. We also needed to continuously manage large-scale data and be flexible to respond to newly acquired data. Using Amazon SageMaker model training and distributed training libraries, we optimized distributed training and trained the model 59% faster—without major modifications to our training code.”

Seung Hwan Kim, Vice President and Vision Lab Leader, LG AI Research

“At AI21 Labs we help businesses and developers use cutting-edge language models to reshape how their users interact with text, with no NLP expertise required. Our developer platform, AI21 Studio, provides access to text generation, smart summarization and even code generation, all based on our family of large language models. Our recently trained Jurassic-Grande (TM) model with 17 billion parameters was trained using Amazon SageMaker. Amazon SageMaker made the model training process easier and more efficient, and worked perfectly with DeepSpeed library. As a result, we were able to scale the distributed training jobs easily to hundreds of Nvidia A100 GPUs. The Grande model provides text generation quality on par with our much larger 178 billion parameter model, at a much lower inference cost. As a result, our clients deploying Jurassic-Grande in production are able to serve millions of real-time users on a daily basis, and enjoy the advantage of the improved unit economics without sacrificing user experience.”

Dan Padnos, Vice President Architecture, AI21 Labs

With the help of Amazon SageMaker and the Amazon SageMaker distributed data parallel (SMDDP) library, Torc.ai, an autonomous vehicle leader since 2005, is commercializing self-driving trucks for safe, sustained, long-haul transit in the freight industry.

“My team is now able to easily run large-scale distributed training jobs using Amazon SageMaker model training and the Amazon SageMaker distributed data parallel (SMDDP) library, involving terabytes of training data and models with millions of parameters. Amazon SageMaker distributed model training and the SMDDP have helped us scale seamlessly without having to manage training infrastructure. It reduced our time to train models from several days to a few hours, enabling us to compress our design cycle and bring new autonomous vehicle capabilities to our fleet faster than ever.”

Derek Johnson, Vice President of Engineering, Torc.ai

Sophos, a worldwide leader in next-generation cybersecurity solutions and services, uses Amazon SageMaker to train its ML models more efficiently.

“Our powerful technology detects and eliminates files cunningly laced with malware. Employing XGBoost models to process multiple-terabyte-sized datasets, however, was extremely time-consuming—and sometimes simply not possible with limited memory space. With Amazon SageMaker distributed training, we can successfully train a lightweight XGBoost model that is much smaller on disk (up to 25 times smaller) and in memory (up to five times smaller) than its predecessor. Using Amazon SageMaker automatic model tuning and distributed training on Spot Instances, we can quickly and more effectively modify and retrain models without adjusting the underlying training infrastructure required to scale out to such large datasets.”

Konstantin Berlin, Head of Artificial Intelligence, Sophos

"Aurora’s advanced machine learning and simulation at scale are foundational to developing our technology safely and quickly, and AWS delivers the high performance we need to maintain our progress. With its virtually unlimited scale, AWS supports millions of virtual tests to validate the capabilities of the Aurora Driver so that it can safely navigate the countless edge cases of real-world driving."

Chris Urmson, CEO, Aurora

"We use computer vision models to do scene segmentation, which is important for scene understanding. It used to take 57 minutes to train the model for one epoch, which slowed us down. Using Amazon SageMaker’s data parallelism library and with the help of the Amazon ML Solutions Lab, we were able to train in 6 minutes with optimized training code on 5 ml.p3.16xlarge instances. With the 10x reduction in training time, we can spend more time preparing data during the development cycle."

Jinwook Choi, Senior Research Engineer, Hyundai Motor Company

“At Latent Space, we're building a neural-rendered game engine where anyone can create at the speed of thought. Driven by advances in language modeling, we're working to incorporate semantic understanding of both text and images to determine what to generate. Our current focus is on utilizing information retrieval to augment large-scale model training, for which we have sophisticated ML pipelines. This setup presents a challenge on top of distributed training since there are multiple data sources and models being trained at the same time. As such, we're leveraging the new distributed training capabilities in Amazon SageMaker to efficiently scale training for large generative models.”

Sarah Jane Hong, Cofounder/Chief Science Officer, Latent Space

“Musixmatch uses Amazon SageMaker to build natural language processing (NLP) and audio processing models and is experimenting with Hugging Face with Amazon SageMaker. We choose Amazon SageMaker because it allows data scientists to iteratively build, train, and tune models quickly without having to worry about managing the underlying infrastructure, which means data scientists can work more quickly and independently. As the company has grown, so too have our requirements to train and tune larger and more complex NLP models. We are always looking for ways to accelerate training time while also lowering training costs, which is why we are excited about Amazon SageMaker Training Compiler. SageMaker Training Compiler provides more efficient ways to use GPUs during the training process and, with the seamless integration between SageMaker Training Compiler, PyTorch, and high-level libraries like Hugging Face, we have seen a significant improvement in training time of our transformer-based models going from weeks to days, as well as lower training costs.”

Loreto Parisi, Artificial Intelligence Engineering Director, Musixmatch

Resources

Train 175+ billion parameter NLP models with model parallel additions and Hugging Face on Amazon SageMaker.

AWS re:Invent 2022 - Train ML models at scale with Amazon SageMaker, featuring AI21 Labs

Download SageMaker model training and tuning code samples from the GitHub repository.

Train gigantic models with near-linear scaling using sharded data parallelism on Amazon SageMaker

Improve price performance of your model training using Amazon SageMaker heterogeneous clusters

Follow the step-by-step tutorial to learn how to train a model using SageMaker.

In this hands-on lab, learn how to use SageMaker to build, train, and deploy an ML model.

Get started building with SageMaker in the AWS Management Console.